Wittgenstein, Shakespeare, and Cookie Monster

Saturday, September 17, 2011

javascript functions untangled

Most JS tutorials and books are concerned with how functions connect to objects created by them, not with how they connect with objects that are created before them.

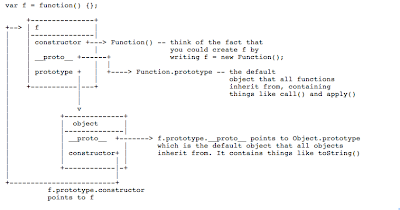

I am really amazed that I haven't been able to find a simple chart (e.g. a UML diagram) of this anywhere. I've found idealized charts that leave out a lot of the details, but I haven't found anything resembling what I've put together, below.

If you know of a better chart, please link to it.

If you find an error in mine, please let me know. I am not a JS expert, though after researching this, I am inching closer to being one.

http://www.asciiflow.com/#4511137586823282615

Note: the __proto__ property isn't an official part of the ECMAScript spec. It was invented by Mozilla to give you access to a hidden property of objects, a property that, according to the spec, you're not supposed to be able to access. (In the spec, that property is called [[Prototype]]. Mozilla exposes it as __proto__.)
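ECMAScript 5 also defines a standard function, Object.getPrototypeOf(), that reads the same hidden [[Prototype]] link without going through __proto__. Here is a minimal check you can paste into a browser console or Node. The variable x is only for illustration.

```javascript
var x = {};

// The Mozilla-style __proto__ accessor...
console.log(x.__proto__ === Object.prototype); // true

// ...and the standard ES5 function that reads the same hidden link.
console.log(Object.getPrototypeOf(x) === x.__proto__); // true
```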

Notes On the Chart

===== f.constructor =====

A constructor (not the constructor property of f, but constructors in general) is a function used to create objects. In JS, you can create a new object in a variety of ways. If you're creating a new function (in JS, functions are objects that have the extra ability of being callable), you can do so this way...

var f = function() {};

or this way

var f = new Function();

The former way is the standard one. It doesn't literally call Function() behind the scenes, but it produces the same kind of object the latter way does. So Function() (with a capital F) is a constructor: it's a function that creates other objects (when used in conjunction with the new operator). Specifically, the Function() constructor creates functions (which are objects).

This is why, if you look at the chart above, the f object (a function) has a constructor property that points to Function(): Function was the constructor function used to create f. Objects in JS (such as f) have constructor properties that point to the function that was used to create them.
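A quick way to confirm that both ways of creating a function give you the same kind of object is to compare their parents and their constructor properties. This sketch uses the standard Object.getPrototypeOf(), which reads the same hidden link that __proto__ exposes. The names f1 and f2 are just for illustration.

```javascript
// Two ways of creating a function object.
var f1 = function () {};
var f2 = new Function();

// Both have Function.prototype as their parent...
console.log(Object.getPrototypeOf(f1) === Function.prototype); // true
console.log(Object.getPrototypeOf(f2) === Function.prototype); // true

// ...and both report Function as their constructor.
console.log(f1.constructor === Function); // true
console.log(f2.constructor === Function); // true

// The constructor property isn't stored on f1 itself;
// JS finds it on f1's parent (Function.prototype).
console.log(f1.hasOwnProperty("constructor")); // false
```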

===== f.__proto__ =====

(I'm going to write as if __proto__ were a standard property of JS, but see my note above. It basically IS a standard property, but the spec defines it as hidden -- inaccessible -- whereas Mozilla allows you to see it.)

Any object's __proto__ property points to its parent object.

If I have an object called x and I try to access its color property (x.color), what happens if x doesn't have one? Well, before JS starts shouting "Error! Error! Error!" it first checks to see if there's an x.__proto__.color property.

In other words, if x doesn't have color but x's parent has it, JS acts as if x DOES have a color property. When you ask for the value of an object's property, you are really saying "Give it to me if you or any of your ancestors have it."

JS will keep walking up the chain -- x.__proto__.__proto__.__proto__ ... -- until it finds color or until it gets to Object.prototype (the trunk of the tree) and finds that IT doesn't have color. It will THEN say "Error! Error! Error!" (actually, it will just tell you that x.color is undefined).
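The walk described above can be written out by hand. The helper lookupProperty below is hypothetical, written only to mirror the steps; it is not a built-in. It's also simplified, since it ignores getters/setters and other edge cases the real lookup handles.

```javascript
// Hypothetical helper that mimics what JS does when you read obj[name].
function lookupProperty(obj, name) {
  var current = obj;
  while (current !== null) {
    // Does this object itself (not its ancestors) have the property?
    if (Object.prototype.hasOwnProperty.call(current, name)) {
      return current[name];
    }
    // No: move up to the parent, the object __proto__ points to.
    current = Object.getPrototypeOf(current);
  }
  // We walked past Object.prototype (whose parent is null) without finding it.
  return undefined;
}

var base = { color: "red" };
var x = Object.create(base); // x's parent (__proto__) is base

console.log(lookupProperty(x, "color")); // "red", found on x's parent
console.log(lookupProperty(x, "size"));  // undefined, not found anywhere
console.log(lookupProperty(x, "toString") === Object.prototype.toString); // true
```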

In a language like Java or C++, x.__proto__.__proto__ would be written as x.parent.parent.

In JS, if you create a simple object...

var x = {};

or

var x = new Object();

That object's __proto__ is Object.prototype, the trunk of the tree. It gives x access to basic methods like toString(). When you type x.toString(), JS goes "Hey! x doesn't have a toString() method. Error! ... Oh, wait.... I should check x's __proto__... Let's see, x's __proto__ is Object.prototype and that has a toString() method. So I shouldn't have said 'Error.' My bad."
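Here is that conversation with JS, checked in code. The names a and b are just for illustration.

```javascript
var a = {};
var b = new Object();

// Both simple objects have Object.prototype as their parent.
console.log(Object.getPrototypeOf(a) === Object.prototype); // true
console.log(Object.getPrototypeOf(b) === Object.prototype); // true

// a has no toString() of its own...
console.log(a.hasOwnProperty("toString")); // false
// ...so a.toString is the one inherited from Object.prototype.
console.log(a.toString === Object.prototype.toString); // true
console.log(a.toString()); // "[object Object]"

// Object.prototype is the trunk of the tree: it has no parent.
console.log(Object.getPrototypeOf(Object.prototype)); // null
```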

f.__proto__ points to Function.prototype. This is because functions need access to some things besides basic Object methods like toString(). For instance, they need access to call(), apply() and a property called arguments.

I didn't include this on my chart, but f.__proto__.__proto__ === Object.prototype. So functions inherit from Function.prototype, which inherits from Object.prototype. This allows me to use f.call() (from Function.prototype) and f.hasOwnProperty() (from Object.prototype). (f.toString() is a special case: Function.prototype has its own toString(), which shadows the one on Object.prototype.)
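A short sketch of that two-level chain for a function. The body of f is made up so that calling it returns something visible.

```javascript
var f = function () { return "called"; };

// f's parent is Function.prototype, whose parent is Object.prototype.
console.log(Object.getPrototypeOf(f) === Function.prototype); // true
console.log(Object.getPrototypeOf(Function.prototype) === Object.prototype); // true

// call() comes from Function.prototype...
console.log(f.call === Function.prototype.call); // true
console.log(f.call(null)); // "called"

// ...while hasOwnProperty() is found one level higher, on Object.prototype.
console.log(f.hasOwnProperty === Object.prototype.hasOwnProperty); // true

// toString() is found first on Function.prototype, which has its own copy.
console.log(Function.prototype.hasOwnProperty("toString")); // true
console.log(f.toString === Function.prototype.toString); // true
```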

===== f.prototype =====

One of the most confusing aspects of JS is the distinction between __proto__ and prototype.

__proto__, as discussed above, is the parent of an object, whereas prototype is the object that a constructor function injects into the __proto__ properties of the objects it creates. (If that doesn't make sense, keep reading.)

Note that if you type this...

var x = {}; //Or var x = new Object();

... and examine the result in Firebug or Chrome's JS console, you'll see that x has a __proto__ but no prototype. That's because only functions have prototypes, because only functions can be used as constructors, and only constructors inject values into the __proto__ properties of objects they create.

It's VERY confusing to say that only functions have prototypes, because -- if we use the word "prototype" in a different way, as I've done many times, above -- all JS objects have prototypes, meaning that they all have parents.

But remember that an object's parent is stored in its __proto__ property, NOT in its prototype property (most objects, not being functions, don't have a prototype property).

Even a function's parent isn't stored in its prototype property. A function's parent is stored in its __proto__ property, as is the case with all other objects. So it's not the case that only functions have prototypes (parents); it's the case that only functions have PROPERTIES called "prototype," and those properties DON'T store pointers to the functions' own parents.
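The difference between "has a parent" and "has a property called prototype" is easy to check. This sketch compares a plain object with an ordinary function expression like f. The names obj and f are just for illustration.

```javascript
var obj = {};
var f = function () {};

// A plain object has a parent but no property named "prototype".
console.log(Object.getPrototypeOf(obj) === Object.prototype); // true
console.log(obj.prototype); // undefined

// A function has both, and they are different objects.
console.log(typeof f.prototype); // "object"
console.log(Object.getPrototypeOf(f) === Function.prototype); // true
console.log(Object.getPrototypeOf(f) === f.prototype); // false
```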

I wish JS (or Mozilla) would rename __proto__ to parent and rename prototype to parentOfChildrenMadeByMe.

So, think of f.prototype as f.parentOfChildrenMadeByMe.

If you type this...

var x = new f();

... this happens behind the scenes:

x.parent = f.parentOfChildrenMadeByMe

Well, in actual JS terms, that's...

x.__proto__ = f.prototype

... so that afterwards x.__proto__ === f.prototype.
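The behind-the-scenes steps of new can be sketched by hand. fakeNew below is a hypothetical helper, not a built-in, and it's simplified: the real new operator handles edge cases this ignores. Object.create() (ES5) makes a new object with a given parent, which stands in for "x.parent = f.parentOfChildrenMadeByMe." The name parameter on f is added only so the example has something to store.

```javascript
// Hypothetical, simplified imitation of `new constructor(...args)`.
function fakeNew(constructor) {
  // 1. Make a new object whose parent (__proto__) is constructor.prototype.
  var obj = Object.create(constructor.prototype);
  // 2. Run the constructor with `this` bound to the new object.
  var args = Array.prototype.slice.call(arguments, 1);
  var result = constructor.apply(obj, args);
  // 3. If the constructor explicitly returned an object, use that instead.
  var returnedObject =
    (typeof result === "object" && result !== null) || typeof result === "function";
  return returnedObject ? result : obj;
}

var f = function (name) { this.name = name; };

var x = fakeNew(f, "x");
console.log(Object.getPrototypeOf(x) === f.prototype); // true
console.log(x.name); // "x"

// Same parent as an object made with the real new operator.
var y = new f("y");
console.log(Object.getPrototypeOf(y) === Object.getPrototypeOf(x)); // true
```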

So what is contained in f.prototype (what value does it inject into the __proto__ properties of the objects it creates)? As my chart suggests, f.prototype is an object that contains __proto__ and constructor properties.

===== f.prototype.constructor =====

This points back to f. Why? Remember, f.prototype is a "gift" that f (a constructor function) gives to all the objects it constructs. So, if x is one of those constructed objects...

x.__proto__ === f.prototype

So if f.prototype.constructor is f, then x.__proto__.constructor is f, too, because x.__proto__ and f.prototype are the same object.

Remember, __proto__ is "parent," and you can access a parent's properties through its child. So, since x is a child of its own __proto__ (its own parent), that means that x.constructor is ALSO f. Or rather, when you ask for x.constructor, JS doesn't say "Error! Error!" It first checks to see if x.__proto__ has a property called constructor, and when it finds that it does, JS returns its value as if it were stored in x.constructor.

So by setting f.prototype.constructor to f, JS has found a sneaky way for x (or any object f constructs) to know who constructed it.
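Here is that trick checked in code: f.prototype's only own property is constructor, and x finds it through its parent rather than owning it.

```javascript
var f = function () {};
var x = new f();

// f.prototype's only own property is constructor, which points back to f.
console.log(Object.getOwnPropertyNames(f.prototype).join(", ")); // "constructor"
console.log(f.prototype.constructor === f); // true

// x has no constructor property of its own...
console.log(x.hasOwnProperty("constructor")); // false
// ...but the lookup finds it on x's parent, f.prototype.
console.log(x.constructor === f); // true
```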

===== f.prototype.__proto__ =====

So who is the parent of f.parentOfChildrenMadeByMe? In other words, if I type...

var x = new f();

... x's parent (its __proto__) is f.prototype. (If you don't understand why, read the above section called "f.prototype.")

But what is x's parent's parent? It's f.prototype.__proto__, and THAT is Object.prototype, the trunk of the tree -- the object that gives all its children core methods like toString().
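To see x's whole family tree at once, you can walk up from x until you hit null and label each ancestor you recognize. The labels array is just for illustration.

```javascript
var f = function () {};
var x = new f();

// Walk up x's chain of parents, naming each one we recognize.
var labels = [];
for (var p = Object.getPrototypeOf(x); p !== null; p = Object.getPrototypeOf(p)) {
  if (p === f.prototype) labels.push("f.prototype");
  else if (p === Object.prototype) labels.push("Object.prototype");
  else labels.push("(unrecognized)");
}

console.log(labels.join(" -> ")); // "f.prototype -> Object.prototype"
```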

How Do I Know All This?

I wish I could cite sources, but I cobbled this together via many hours of googling, and by combining information from dozens of sites and books, and I didn't save all of my steps. But I tested my understanding with this script, checking the output in Chrome's JS console.

<html>

<body>

<script>

var f = function() {};

console.log( "f.__proto__ === Function.prototype --> ", f.__proto__ === Function.prototype ); //true

console.log( "Function.prototype --> ",Function.prototype ); //displayed by Chrome's console as Function Empty() {}

console.log( "f.__proto__.__proto__ === Object.prototype --> ", f.__proto__.__proto__ === Object.prototype ); //true

console.log( "f.constructor --> ", f.constructor ); //function Function() { [native code] }, i.e. Function()

console.log( "f.prototype --> ", f.prototype ); // Object

console.log( "f.prototype.__proto__ === Object.prototype --> ", f.prototype.__proto__ === Object.prototype ); //true

console.log( "f.prototype.constructor === f --> ", f.prototype.constructor === f ); //true

console.log( "f.__proto__.__proto__ === f.prototype.__proto__ --> ", f.__proto__.__proto__ === f.prototype.__proto__ ); //true: both are Object.prototype

var x = new f();

console.log( "x.__proto__ === f.prototype --> ", x.__proto__ === f.prototype ); //true

console.log( "x.__proto__.__proto__ === f.prototype.__proto__ === Object.prototype --> ", x.__proto__.__proto__ === f.prototype.__proto__ && f.prototype.__proto__ === Object.prototype ); //true

</script>

</body>

</html>

If you're trying to understand JS, I urge you to play with the script, look at my chart, and read through this post until you "get it." The pay-off for me has been immense.

Friday, August 12, 2011

why directors suck

Stephen Sondheim just ripped Diane Paulus a new asshole. (NY Times story) Paulus is directing a Broadway revival of "Porgy and Bess," and she -- and her colleagues -- have chosen to adapt the play almost beyond recognition. Sondheim's letter to the "Times" sparked an electrical storm of comments in newspapers, magazines, blogs and in person, about the state of the theatre and directors. A (female) Facebook friend of mine, mentioning Paulus and "Spiderman's" Julie Taymor, complained that women directors in particular are crapping all over the theatre. Here's my response:

I don't think women directors are especially bad. I think DIRECTORS are especially bad -- most directors, regardless of their gender. And it really won't solve anything to get rid of directors, because -- paradoxically -- even though the role of director is new, it's also ancient.

SOMEONE has always been at the helm, whether it was an actor-manager, a committee of actors, a producer, or the playwright. And we're living in a fool's paradise if we think just letting writers direct their own plays (or letting actors do it) will solve the horrible problems plaguing the theatre these days. When I see writer/actor-directed shows, I see the same bullshit I see in director-directed shows. Here are some of the reasons why directing is in such a dire state:

1. No education. How do you learn to be a director? It's not taught. Sure, there are MFA programs (I went to one), but they really don't teach directing. Generally, they give students a chance to direct without giving those students much guidance -- certainly no coherent guidance. In my MFA program, I got a lot of feedback (this worked; this didn't), but little help understanding what my role was, and little help developing techniques and procedures. Which leads to...

2. most directors not knowing what it is they're supposed to be doing. Whatever their job is, it's something they've made up -- or pulled out of their asses. A few stumble upon a procedure that works; most don't. There's almost no apprenticeship going on, so each new generation of directors starts from scratch, reinventing wheels, usually in inferior ways. When there's an occupation that has no parameters, that leads to...

3. practitioners feeling insecure. Many directors have Impostor Syndrome. How does one fight that disease? By doing too much. It's the exact opposite of how one SHOULD fight the disease, but when you're suffering from Impostor Syndrome, you have an overwhelming urge to proclaim "I know what I'm doing! Look! Look at me doing my job!" Which leads to...

4. directors doing noticeable things. Usually, it's best if the audience doesn't think about the director at all. He's succeeded when the story just seems to tell itself. People go to "Porgy and Bess" to see "Porgy and Bess" -- not to see Diane Paulus. But, if Paulus is like most directors, her Impostor Syndrome will DEMAND that she make the play about her. So she'll shit "concept" all over it, and she'll make sure that her shit stinks so strongly that it's impossible to ignore the stench.

If there's any truth to the claim that women directors are especially shitty, it may be because women -- since they've had to fight and claw their ways into positions of power -- often have a strong need to say "Look at me! I made it! I'm here!" It's great for women and society that women are able to do that, but no director, male or female, should ever be saying that.

5. Back to education: theatre is a text-based craft. Why? Because we can't compete with the visuals on television and in the cinema. What we CAN do is give actors and audiences a distraction-free environment within which they can confront a text and form a relationship with it.

But here in the 21st Century, our educations are not text based. When I (briefly) taught directing, I learned that college-aged students don't know how to research, analyze scripts, work with actors or understand rhetoric. They have virtually no knowledge of history -- theatre or world history. And they are not well read. Contemporary politics has made them shun the Dead White Males that, for good or ill, make up the bulk of theatre's historical canon. I don't blame young students for their lack of knowledge. I blame our high schools and colleges.

6. Most theatre directors can't answer this vital question: "Why am I choosing to direct plays and not films?" ANY director who can't answer that question in a meaningful way has no business directing plays. None.

And the answer -- for a director -- can't be "because I like the excitement of a live audience." That's a good answer for an actor, but not for a director, because it can't inform the craft of directing. That answer can't help directors make meaningful choices.

Our theatre is filled with directors who, for whatever reason, stumbled into the theatre but have no idea why they're there. They grew up -- like most of us did -- with a film-based vocabulary, and consciously or unconsciously, they are trying to direct movies on stage. Here's an acid test: I offer you enough money to direct 10 feature films or direct 10 Broadway shows, with no restrictions on what you can do once you start directing. You MUST choose either the films or the shows, not a mixture. Which do you choose to direct? Why?

7. Finally, all directors need to ask themselves "Do I like going to see plays?" I've met an alarming number of people in the theatre who sheepishly admit, "You know, I like doing the work, but I really don't like going to the theatre." I suspect that's okay for an actor or costume designer to say, but it's not okay for a director to say. If that's the way he feels, he needs to do something else!

Why? Because a big part of his job is serving the audience. The actors are, rightly, serving their characters. But the director needs to understand the audience's needs and cater to them. It is true that directors should direct for themselves; but what makes that process work is that directors -- when the stars align -- are smart audience members. They instinctively know what moves and challenges an audience, because they've often been moved and challenged as audience members.

When they direct, say, "Hamlet," they should say to themselves, "If I went to see this play, what would I like to see?" If their answer is, "I wouldn't go see it in the first place, because I only like working on plays, not watching them," then the greatest service they could do the theatre would be to get out of it. ASAP!

Sunday, July 17, 2011

Is Belief a Choice?

"When you make the choice to believe in God, you..."

I get confused when choice and belief cohabitate in the same sentence, because I've never been able to choose to believe (or disbelieve) anything. Yet so many people talk about beliefs as if they're neckties, as if choosing atheism or theism is as easy as reaching into a closet and picking the one with red stripes or the one with paisley.

When evangelists have tried to convert me, they've acted as if they just have to convince me that believing in God is healthy -- that I'll be happier and have a more meaningful life if I just choose to believe. What they never explain is, assuming I agree with them, exactly how I'm supposed to flip the atheist-to-theist switch. No doubt I'd be happier if I believed I was a millionaire, too. But I just can't seem to forget my actual, pitiful bank balance.

To start untangling this, I'm going to grapple with the word "belief," because I suspect some of my confusion comes from different people using the word to mean subtly different things. When you and I both say the word "belief" in the same conversation, are we talking apples and apples or apples and oranges?

So I'll define what I mean by belief. If you mean something different by it, that's fine. Please don't tell me that I'm wrong about what belief means. I'm not claiming that I'm right. I'm just defining belief in a specific way, to explain how *I* use the word -- and one thing I AM right about is how *I* use it. If you want to use it some other way, go forth, my son, and do so. You have my blessing. If you think my way of using it is silly or pointless, quit reading this once you come to that conclusion.

I'll start with a couple of things I DON'T mean by belief: I don't mean practice. If someone ACTS as if he believes, that's not enough to constitute belief in my mind. I'm sure there are plenty of religious people who, on some level, are unsure of whether or not God exists, but they go to church, read the Bible, etc. And there are probably "atheists" who are really agnostics, but they self-identify with the label "atheist," read books by Richard Dawkins and so on.

(Daniel Dennett makes the distinction between believing in God and believing in belief in God. The latter means thinking that belief in God is a good thing and cheerleading yourself towards it, whether you actually believe God exists or not. And the interesting thing, to me, is that people confuse the two sorts of beliefs. They really feel like believing in belief is the same as believing. And on an emotional level it is: enthusiasm is enthusiasm.)

If when an evangelist tells me to choose, he means "choose to go to church and live your life as if you believe, whether you actually do or not," I could do that (though I might choose not to), but that's not what I mean by belief.

Please note that I am not belittling folks who believe in that sense, nor am I claiming they're lying. There are good reasons to be an "in practice" believer. For instance, if someone WANTS to believe but doesn't yet, he might go through the motions in hope that doing so will evoke belief. (Belief is also not necessarily a steady state. It may wax and wane. Meanwhile, when it's in the wane stage, one can participate in rituals -- clinging to them until a wandering belief returns to the hearth.)

I can also see why a practicer might call himself a believer just to simplify conversation. What's easier to say? "I'm a Christian" or "I'm a guy who goes to church every Sunday, reads the Bible, wants to believe, often does or almost does, sometimes has doubts, but is hoping to believe permanently?" Truth is, in most conversational circumstances, the latter would be inappropriate, even if it's technically true. It would be too much information. "Hey! I just wanted to know whether to get you a Christmas card or a Chanukah card, buddy. I didn't want your life story!"

Finally, it's possible to just not think about it all that much: "I need God in my life, I heard some arguments for his existence once, and they seemed convincing, I go to church regularly, so ... I'm a Christian. I believe in God."

With the greatest respect to people who believe in that sense, that's not what I mean by belief. To me, someone who is trying to believe -- or someone who thinks belief is a good thing -- does not qualify as a believer (without some other mindsets also being present).

Though this doesn't capture the sincerity with which many people embrace rituals, creeds, mental frameworks and lifestyles, for lack of a better term, I'm going to call this sort of belief "acting," to distinguish it from what I'm calling "believing": "Mike is acting like a Christian." (If this sounds insulting to you, note that I'm a theatre director. I see how fervently actors throw themselves into their roles, and I take acting very seriously. When an actor plays Hamlet, he reaches for a sort of truth. Still, he's not actually Hamlet.)

The second thing I don't mean by belief is "a good guess." One might say, "I believe it's going to rain tomorrow," meaning "I looked at the weather report, and they presented evidence that it's LIKELY to rain tomorrow. If I had to place a bet, I'd bet on rain. But I am aware that it might not rain."

That sort of belief connects smoothly with the first sort I described, the ritualistic sort. "Based on the 'belief' that it's going to rain tomorrow, I'm going to carry an umbrella." In other words, even though I don't know for sure that it's going to rain tomorrow, I'm going to live my life as if it's a fact. I'm not going to live my life as if it MAY happen. (How would I do that, anyway?) I'm going to live my life as if it's GOING to happen.

(When the weatherman says "There's a 90% chance of rain tomorrow," do you think of tomorrow as a rainy day or as a day in which it's likely to rain? I would love to conduct an experiment that tells me how the majority of people think about things like this.)

Here's what makes this sort of belief not the kind I'm talking about: what happens when it doesn't rain tomorrow? Do you feel like "Okay, the weather report was wrong?" or do you feel like "Oh my God! How is this possible? I'm in the Twilight Zone! I've passed through the looking glass!" I'm guessing the former.

The beliefs I'm talking about are ones the mind grasps as FACTS. They might be things that one intellectually knows might be false, but they must be things that one can't emotionally accept as false -- or at least things that would be very hard to emotionally accept as false. (For some people, an example of this is Free Will. They agree, logically, that it can't exist, but they can't shake the feeling that it does.)

In the case of such beliefs, it is not necessarily because one NEEDS to believe -- though that might be the case. You might have a hard time accepting that a fact isn't true simply because your senses are constantly bombarded with the impression that it IS true. This happens at magic shows. One knows the conjurer is "just doing tricks," but they SEEM real. If you don't at least feel like they're real, the show is no fun.

I am not saying that most people believe David Copperfield actually has magic powers. They don't. But that feeling -- the feeling that magic has happened, even if you know it hasn't -- is a necessary component for what I'm calling a belief. (It's not sufficient. More is needed. But it must be present.)

To distinguish that sort of belief from "I believe it's going to rain tomorrow," I'm going to call the latter "a good guess." So we have acting, guessing ... and believing.

Believing -- as I'm defining it -- is accepting something as true (with or without evidence) AND falling into a mental state in which it's really hard to UNaccept the belief. The belief becomes axiomatic. It's not just the case, as in acting, that you live as if you believed the claim to be true. In the case of beliefs, your MIND behaves as if the claim is true. In other words, you don't generally question the claim. Maybe you will question it when new evidence arises, but your default state is to accept the claim in the same way that you accept the fact that the sun will rise tomorrow. It doesn't occur to you that it might not, and you'd be floored if it didn't.

Let's say that I proved to you -- conclusively -- that sidewalks don't exist. You might totally understand (and even accept) the logic and INTELLECTUALLY believe me, meaning that you couldn't refute it. Still, you have the constant sensation of walking on a sidewalk.

That feeling isn't enough to make "it sure feels like there's a sidewalk" a belief (in the sense that I mean), because you might truthfully think "I'm hallucinating." In order to qualify as a belief, a claim must seem to be true intellectually AND be unassailable (or at least assailable only with great difficulty) emotionally.

Given that, let's return to the question of whether or not one can choose beliefs: I am an atheist for several reasons. First of all, I am convinced by the logic put forth by many scientists, skeptics and philosophers that we have no evidence for God. Don't worry about whether those people have led me down the path of truth or deluded me. That's not important (to what I'm writing about here). The point is that, right or wrong, they've intellectually convinced me.

On top of that, I've never experienced God on a visceral or emotional level -- not even for a second. (I've never had the feeling of someone watching over me or of an intelligence "out there", even as a fiction.) So the claim "God exists" doesn't just hit me as intellectually false, it also FEELS similar to "you have three hands." I have never in my life had the sensation of having three hands. Could I choose to believe -- really believe -- that I have three hands?

The question is this: can I CHOOSE to go from the state I'm in to one of belief in God, as I've defined belief? And, if so, how? Let's say you convince me that I'd be a happier person if I were a believer. I accept your logic and WANT to believe. How should I make myself do it?

The truth is, I'm a tough nut to crack. I am guessing people exist on a spectrum, credulous to obstinate. "Obstinate" isn't really the right word, because it implies volition. I don't choose to be obstinate. I just don't know how to budge from my atheism. I don't know how to find the arguments I find logical illogical, and I don't know how to give myself the visceral feeling of God's presence. Still, I'm guessing that some people would have an easier time than I would.

Someone suggested that to me recently. He said, "I think most people can choose their beliefs. You're just an exception." Though I agree with him that there's a spectrum and I'm on an extreme end of it, I think the truth is a bit more complicated than my friend suggested.

Given a particular truth claim, there are (at least) three things that influence the likelihood of someone believing in it: (1) the person's location on the "credulity scale," (2) the nature of the claim itself, and (3) its relationship with other facts/beliefs the person already holds.

Let's try a thought experiment. Imagine a shoebox-like container that's divided into two chambers, left and right. I put a red ball in the left chamber and leave the lid off the box. I tell you to peer down into it. You can clearly see that the ball is in the left chamber. I ask you to choose to believe that it's in the right. Can you do it?

Where someone lies on the credulity scale might have some bearing on his ability to make himself believe a direct contradiction of his senses, but I'm guessing all but the most extremely credulous will find it impossible to believe (in my sense of the word) that the ball is in the right chamber.

Beliefs aren't easily malleable when they clash with (or are buttressed by) direct sensory experience.

Now imagine that I put the lid on the box. I don't shake the box or manipulate it in any way. I just drop the ball in the left chamber (and you see me do it) and then cover the box with a lid. I then ask you to believe the ball is in the right chamber. To me -- in my extreme position on the credulity scale -- this belief switch would be as impossible as in the former case, when the lid was off. But I'm betting it would be slightly easier for some people, especially after a passage of time, when seeing the ball dropped into the left chamber has become a dim memory.

Finally, imagine that I put the ball in one of the two chambers without you seeing me do it. I then put the lid on the box and seal the box closed in a way that makes it impossible to open. Let's assume I have magic powers and I can make it totally impossible to open (or see into with x-rays) -- even with the strongest tools available. You can never know which chamber houses the ball. Can you choose to believe it's in the right one?

(You can live your life as if it's in the right one, but, remember, that's not what I'm talking about.)

I'm guessing that, at this point, a lot of people can -- maybe even people who are halfway between credulous and obstinate on the Credulity Scale. Most minds hate mysteries and will resolve them if possible.

Someone even suggested to me that while it's not possible to choose one's beliefs in all cases, it is ALWAYS possible when faced with an unknowable. The form this usually takes in religious debates is the claim that, since we can't conclusively prove that God does or doesn't exist, theism/atheism is a choice.

Before I was an atheist, I had a brief period of agnosticism. Many people, usually theists, told me that "agnosticism is a copout." This confused me no end. To me, it just seemed descriptive. Copping out suggests not living up to responsibilities, but I really didn't know whether or not God existed. If it was my responsibility to know, how on Earth was I supposed to make myself know? Can you force yourself to know who is going to win next year's lottery? No. You simply don't know and can't know.

I now wonder if people thought I was copping out because, for them, when faced with an unknowable, they are able to flip into belief or non-belief. And they assumed I was able to do that too and was just refusing to admit to how I'd flipped -- or maybe they thought I was refusing to take some little mental step that would have allowed me to flip. (What step would that be?)

Of course, to a lot of people, there's a political element to religious debates. Agnosticism may seem, to many, like fence sitting. "Either join the separation-between-church-and-state team or the Intelligent-Design team, Dammit!" That's an example of acting: it has more to do with "living your life as if" than it does with the sort of belief I am talking about.

Cheerleading is also an example of this, as is "dressing as if you have the job." I've always been fascinated by the claim that football players convince themselves they're going to win, and that their belief gives them an edge. I have no doubt that it does. I'm curious about whether they believe they are going to win in the sense I'm talking about (and feel like they're in the Twilight Zone if they wind up losing) or whether they're cheerleading: they know they might possibly lose, but they're trying to drown out that possibility by shouting "I think I can; I think I can; I think I can..."

There are many reasons I suck at sports, but one of them is my inability to whip myself into a mental state. While everyone else on my team is saying, "We're GOING to win," I'm thinking, "Well, of course, I hope we do, but we might lose."

I can't possibly choose to believe the ball is in the right chamber, even if there's no way I can ever know which chamber it's in. In that case -- maybe due to my location on the Credulity Scale -- I am stuck with "I don't know." It's not a copout. It's an accurate description of my mental state.

Why am I stuck with "I don't know" while (some? most?) others are able to believe that the ball is in the right chamber? I suspect it has to do with ability-to-forget or ability-to-not-think-about. (Some prefer the term denial, but I don't like it. It sounds too conscious to me. If you're working to deny a proposition, then at least on some level, you believe or partially believe it. You are straining to thwart that belief: cheerleading. Whereas forgetting is a gentler, less conscious approach. If you successfully forget something, it's just gone from your brain. You don't have to work to keep forgetting it.)

Assuming you're a rational person, you know that the ball could be in the left or the right chamber. And you also know that, since the box is sealed forever, there's no way of knowing which chamber it's in. Essentially this fact is in your mind: it's impossible to know.

Meanwhile, you're trying to believe that you DO know -- that you know the ball is in the right chamber. So you're trying to reconcile these two ideas:

1. It's impossible to know which chamber the ball is in.

2. The ball is in the right chamber.

If you can somehow forget the first idea -- or even greatly weaken it -- you may be able to accept the second one. In this case, that's what choosing-to-believe would mean: choosing to forget (or not think about) idea one and remember idea two. And the question for me is, how can I do that? "Just don't think about it" isn't a useful answer to someone who doesn't know how to do that.

That might be the crux of what makes belief a non-volitional act for me: I don't have much control over my thoughts. I am, and always have been, the kind of guy who lies awake and worries. I can't (or at least I don't know how to) just stop worrying. I totally agree with the logic that worrying doesn't solve anything: it's wasted energy. But agreeing with that does nothing to stop me worrying. I have never felt like I'm willing my thoughts. To me, thoughts are things that HAPPEN to me. They come into my head unbidden.

Try the bent-spoon test: imagine a spoon that's bent. Can you unbend it? Can you keep it unbent? I can't. Or rather, it's anyone's guess whether I'll be able to do so in a particular imagining session. Sometimes the spoon just "wants" to be bent, and no amount of mental unbending will change that. I can imagine myself unbending it, but then the image of the bent spoon pops back into my head.

Try to not imagine an elephant.

My problem with believing that the ball is in the right chamber is that, as far as I can tell, it's impossible for me to forget that I don't know which chamber it's in. When I think of the ball, that fact -- the fact that I can't know -- is continually active in my brain. It's as if my brain contains a blackboard with "you can't know" written on it, and if I try to erase the words, they come right back.

Wednesday, July 13, 2011

politics

Let's say that Sam is a guy who cares deeply about issues. He doesn't care about parties or elections or Red vs Blue. He just thinks that women should have the right to choose whether or not to have an abortion. Or he believes abortion is murder. It doesn't matter. The point is that there are issues on which he has profoundly-felt stances.

His stances might be based on years of study, knee-jerk reactions or biases introduced during his childhood. The point is that, right or wrong, Sam strongly (and honestly) believes what he believes.

He lives in the USA, so in order to push his convictions into action, he has to take a side. He has to start voting for Democrats or Republicans. If he's like most people, he will discover that one of the two parties tends to represent his views more than the other. So, after some time, he will find himself identifying as a Republican or a Democrat -- as a member of SOME group.

At that point of identifying, he will be A MEMBER OF A FAMILY. And that changes everything, because family members act in some very specific ways, both in support of other family members and in defense against others. (Ask the Capulets and the Montagues!) Family members act in ways that don't necessarily have anything to do with issues. They act in support of the family. The family's survival is its own end.

This is something we rarely discuss when it comes to politics. Instead, we posit ourselves as rational beings, only concerned with issues. We don't do this when it comes to biological families. It's not odd to hear a parent say, "My son may have committed that crime, but he's my son! I'm standing by him." But it IS odd to hear someone say, "My party is wrong about this issue, but it's my party and I'm standing by it." Yet that's how people often behave. That's how people behave in families, biological or not. We evolved to behave that way.

Jane never cared about issues as much as Sam. She just grew up as a Republican -- or as a Democrat. That's what everyone around her was. Being a member of her party makes her feel "at home." It makes her feel like she's a part of something bigger than herself. When she goes to a strange city, she knows she has something in common with the strangers there, as long as they're members of her party. (A Liberal can tell a Sarah Palin joke to a room full of total strangers, and as long as those strangers are fellow Liberals, he'll get a good reception.)

Mike's parents are Democrats (or Republicans) and, in a rebellion against them, he embraced the other party with a passion.

Those two parties exist and, as with religious denominations, they recruit.

As I've discovered, in America, if you opt out of politics, people chastise you. You're SUPPOSED to be part of the process. If you aren't, you're not doing your duty. You're being selfish. And while it's possible to be political without affiliating yourself with a party, that's a hard road -- especially if you want to get things done. And the parties are there, waiting, with open arms. And your friends are in them... It's hard not to join. (And it's seen as a GOOD thing to join.)

One way or another, Sam, Jane and Mike get sucked into a team, a side, a family. And our whole political system is infused with language from the military and from sports: winning, losing, defeating... If they let the other team win, their team loses.

Ensuring a win for your team means working to make it win. You're not going to be good at that if you half-heartedly embrace your team -- if you dwell on its problems or on ways you disagree with it. Imagine two boxers pitted against each other in the ring, one who says and thinks "I'm the greatest!" and another who thinks, "Well, I have some strong points, it's true, but my legs are a little wobbly and I didn't get enough sleep last night." Who are you going to bet your money on?

Teams weed out players like that. As an experiment, try getting together with your Democrat or Republican friends and talking about everything that's wrong with your party. See how long you keep those friends. I've tried it. It doesn't go well. (If you're really brave, try talking to your friends about the humanity of the people in the opposite party. That won't go well, either.)

Team membership also means depersonalizing the other team. This happens in war and it happens in sports. It's necessary. It's really hard to win if you're constantly thinking about the other side as fully-realized people -- people who fall in love, are scared to die and who adopt labradoodles. And your team will help you dehumanize the other team. A huge part of being a team-member involves ritualized mockery of the other team (Sarah Palin jokes) and references to the other team as being evil.

Now, I'm not claiming that Sam, Jane and Mike are sheep. Even while they're ensconced in their families, they still care deeply about issues (at least Sam does). If they are lucky, their beliefs fall 100% in line with those of their party. But that rarely happens.

If a Republican can manage to think 100% rationally for five minutes -- or if a Democrat can -- he will have to admit that sometimes, once in a while, the other party must be right about something. But it's hard to be a Democrat and say, "My party is wrong about this issue." It's hard to be a Republican and say, "The other party is right about this issue." It's hard to even keep an open mind that the answer might be YES if your party says it's NO.

But that's what we need in order to really solve problems efficiently: open minds, untainted by biases such as party loyalty. Loyalty to a party has no impact on what is ACTUALLY the case with the environment or how many people will live or die if we outlaw handguns. If the Republicans are right about handguns, then the Democrats are wrong, no matter how loyal they are.

If Sam is deeply loyal to his party and yet suspects it might be wrong about something, he finds himself in a painful state of mind. If you've ever had a beloved parent or sibling who has behaved badly, you know something about how painful this can be. It's Cognitive Dissonance -- a state that's so painful to most of us that we do anything to rid ourselves of it.

The common ways to fight Cognitive Dissonance are by lying, excusing, justifying and ignoring. If being a thinking person and a loyal team member leads to Cognitive Dissonance for many people, we should expect many people to lie, excuse, justify and ignore.

Take a close look at what happens when a politician is caught in the middle of a scandal. People on his team will excuse it. People on the other side will shout to the hills about how evil and corrupt the politician is, even while they'd be making excuses if it was a member of their team. Both sides rightly accuse the other side of being hypocrites, which, ironically, is hypocritical, because the accusers are hypocrites, too.

Team spirit is stronger in the US now than it's ever been in my lifetime -- I'm guessing it's nearing Civil War levels, which should be frightening to all of us. We even have team colors, now. There was a time, not too long ago, when -- even though there were two parties -- we didn't talk about red states and blue states. To what extent is that rhetoric merely descriptive? To what extent does it reinforce a divide, widening it and making it deeper?

In a way, though, Civil War would be a relief. I often feel like saying to Democrats -- and Republicans -- "If you REALLY feel that the other side is evil -- Hitler evil -- then why are you sitting around? Shouldn't you be forming a militia?"

The people around me are clutching tighter and tighter to their teammates and getting angrier and angrier at the other side. What next?

how to think

1. Step in and step back, step in and step back... For instance, if the topic is abortion, think about its ramifications on a specific 16-year-old girl, maybe an actual girl that you know. Then think about its ramifications for society.

Each time you add a new detail to your mental construction, step in and step back again.

This is a really useful process for artistic creation: (step in) what is the color of the grain of sand that the hero is rubbing between his fingers? (step back) What is the story I'm trying to tell?

2. Think sensually. Humans are sensual creatures: we live through taste, touch, smell, seeing and hearing -- also through fucking, fleeing and fighting. How can you tie your idea in with your lizard brain? Or how can you free it from your lizard brain?

The more abstract your topic is, the more it will benefit from sensuality. Is there a way to explore that equation or philosophical idea via sound or color? Do it!

Note: geeky folks in their teens and twenties have a very hard time with this. (I sure did!) They tend to want to live in a world in which everyone is a sort of Mr. Spock and the only thing that exists is pure reason. That's not the real world.

3. Think socially. Even before you fully understand an idea, try to communicate it to someone else. Take note of the parts that were hard to explain and the parts the listener didn't understand. Work on those parts. Brainstorm to come up with sharp examples and metaphors. Keep rethinking and rethinking how you communicate, even if you feel you have come up with the perfect explanation.

Note: PowerPoint is evil. When I was teaching, I forced myself to redraw the same charts over and over for each class, and I found that this process helped me refine them.

Also, collaborate! Get a core group of people to brainstorm with you, and, every once in a while, add a new person into the mix.

4. Do. If it's possible to do it, do it -- don't just think it. If you're sure you know what the result will be, do it anyway. The human brain plugs more easily into the concrete than the abstract.

When I work with actors, and they do a scene as if their character is depressed, I often say, "Try it as if your character is happy." They often say something like, "That won't work, because..." I say, "You're probably right, but please try it, anyway."

They sometimes worry that I'm asking them to try it because that's secretly the way I want them to do it. They feel it's wrong, and they balk at trying it, because they think if they do, I'm going to force them to do it that way forever.

I work to calm their fears. I have no desire to force them into anything. I just have a profound belief that we don't know unless we try. And the more sure we are that we know, the more we need to try.

The only time it's not appropriate to try is when doing so is impossible or prohibitively costly in terms of time or resources.

5. Dig deep. Like a small, annoying child, keep asking "but why?... but why?... but why?..." Keep digging until you get to the foundation, the axiom, the article of faith, the unknowable. Note: the "unknowable" is not the same as "I don't know." What you don't know, you can research. If you can research it, you haven't reached the foundation yet. The foundation is what all your ideas lie on top of, so it's worth knowing what it is and what it implies.

6. MASTER research tools. Note: that includes but doesn't stop with google and wikipedia. If you've ever said, "I googled it and couldn't find the answer" and then stopped trying, that's a fail.

7. Learn more than you have to. If you're trying to learn how to bake bread, also learn how to bake cakes and muffins. Confidence comes from feeling some slack around the edges of your knowledge. If you feel like one question could slam you into a wall of ignorance, you're not there yet. Your knowledge needs breathing room.

8. Push yourself to failure. You learn from failure, as long as you push through it. Once you've mastered solving a particular kind of problem, you don't grow by continually doing those same sorts of problems. So add more challenges. You'll know you've added enough when you fail. And you only fail at failing when you don't keep pushing through it.

9. Play. Improvise, brainstorm, rap, rhyme, etc. Once you loosen the constraints on your brain, your subconscious mind will lead you to all sorts of interesting places. If you think "this topic is too serious for that," you're stuck in a rut and will be unable to come up with all sorts of ideas that would occur to you if you played in the mud for a while.

10. Take a break. Your brain continues to chew after you've stopped forcing food into it. Let it chew on its own for a while. If you're feeling brain dead, stop! Sleep on it. Or, better yet, do something totally different from the problem you're working on and THEN sleep.

11. Falsify your cherished notions. You're a Democrat? What if the Republicans are right? You're an atheist? What if there's a God? If you're closed off to ANY avenue of thought, there are things you won't think of.

Thinking is safe. Don't worry: you won't suddenly start throwing litter out the window just because you muse on the possibility that Global Warming might be a lie.

If it feels safer to you, imagine you're writing a science-fiction novel in which Global Warming is a lie. It's not a lie on our planet, but it IS a lie on planet Alpha Prime Zeta. Okay, what are the ramifications on THAT planet?

Do whatever it takes to push through closed mental doors.

Those things that you're super-confident about: slavery is evil; gay marriage should be legalized; one plus one equals two... Those things aren't wrong. It's good that you're confident about them. But the bad thing about confidence is that it closes mental doors. That's kind of the point: "I don't have to think about that any more. I'm confident that I'm right about it." Allow yourself to say, "I know slavery is evil, but WHAT IF...?"

Your thoughts will never cause slavery to happen. Your thoughts are morally neutral. Stephen King is, by all accounts I've heard, a great guy, but he lets himself imagine people getting dismembered. And no one ever actually gets dismembered because of his thoughts. As long as you feel certain thoughts are wrong, you'll stop yourself from exploring many (possibly) fruitful avenues of thinking. You can't know if they're fruitful or not until you stroll down them.

12. Carry your ideas to their logical conclusions. "I think the sexes should be treated completely equally!" Okay, does that mean we need to abolish separate bathrooms for men and women in the workplace, just as we've abolished separate bathrooms for black people and white people? The goal, when taking your notions to extremes, isn't necessarily to poke holes; it's to test boundaries. In what cases does your idea apply? In what cases does it not apply?

13. Thwart your ego. Ego is almost always the enemy of thought. Most people don't let themselves "go there" -- "there" being to certain mental places -- because "I'm not smart enough."

Which means they're scared of getting their ego bruised by feeling stupid. Fuck that shit! That closes tons and tons of mental doors. You don't need to be smart to think about ANYTHING. You don't need to be right to think about anything. To think, you just need to think. It's okay to fail when you think. It's GOOD to fail. (See item 8.)

To fully embrace this -- and to vanquish ego -- you have to give up thinking in order to prove you're right, to impress your friends and to "be original." Those are all core human urges, and you're never going to rid yourself of them, but try to compartmentalize when your goal is to show off or argue vs when your real goal is to grow mentally or to solve a problem.

If you have convinced yourself that you never think to be right or come off as smart or win points, you're in trouble. We all do that. Admit it. Embrace the fact that you're sometimes going to do it. Know WHEN you're doing it, and stop doing it when it's not appropriate.

14. Think to serve. This is what most helps me thwart my ego. I'm a theatre director and an author. When I direct plays -- as soon as I start hoping the audience will think kindly of ME or be impressed with ME -- I remind myself that it's not about me.

It's about the PLAY. My goal is to SERVE, to serve the play, the story. The story isn't my servant; I'm its servant. If I'm writing a book, my goal is to serve the reader or the subject.

As soon as I redirect my energies that way, my mind expands.

Sites like ask.metafilter.com and quora.com can be great for this, as long as you use them to serve knowledge and not to win arguments, be right or look smart.

15. Don't ever try to be original. That's a mental dead end. When I'm working on a play, I sometimes get the urge to come up with something "cool" or "different." As soon as I feel that pull, I resist. My goal is to tell the story, not to get points for originality. Don't ever try to be cool or original unless THAT'S your acknowledged goal. If your goal is personal growth or problem solving, trying to be original will block you. And the irony is that, by not trying to be original at all, by just honestly working to tackle the problem, you'll wind up BEING original, because your work will be filtered through you, and you are unique in all the world.

Note: it's hard to apply a negative, like "don't be original." When I feel the urge to be original, I sometimes force myself to be derivative. If you're EVER stuck because, though you know how to solve a problem, you don't want to solve it the way everyone else does (because that would be "copying"), copy!

Short, famous version: "kill all your darlings."

16. Eliminate distractions. Every ounce of energy you're not spending on the problem is ... not spent on the problem. So are you wearing comfortable clothes? Is the lighting okay? Are there distracting noises? Do you have pens and paper and whatever else you need near you? It's a bitch if you have to stop working on a problem because you can't find a pen that works. I don't like to waste money, but I overbuy pens and paper and reference books. I want to be able to reach out and have whatever I need leap into my hand.

17. Study history. It's all happened before; it will all happen again. USE IT! By "history," I mean world history, local history or the history of some specific craft -- whatever is appropriate to your endeavor.

18. Identify rigidity and fluidity. What parts of the structure MUST remain fixed or it ceases to be whatever it is? What parts must be elastic or it's not living up to its potential?

Think of yourself as a jazz musician, improvising on "Twinkle, Twinkle, Little Star." What notes MUST be played or the song gets so perverted that it's no longer the song? What parts can be improvised? Are you forcing yourself to hit nails on their heads? Are you forcing yourself to run willy-nilly between those nails?

19. Switch mediums. If you're thinking with prose, draw pictures. If you're lecturing, try miming. Try imposing arbitrary constraints: write about your topic, but force yourself to forgo using all forms of the verb "to be." See http://en.wikipedia.org/wiki/E-Prime

20. Take care of yourself. Exercise and eat well. Get a good night's sleep. There is no brain-body separation. Your brain is part of your body. It's a machine that requires tremendous amounts of energy. Feed it. Care for it. Love it.

the way forward

To understand why "Just the facts, ma'am" doesn't work, imagine moving a dinner forward by saying "Salad, then steak and potatoes, then dessert," without actually serving any food. That doesn't count as "moving forward." We haven't earned the right to proceed to the second course until we've tasted the first.

Tasting is sensual, and there's the key. Humans are sensual creatures. If we haven't seen, heard, felt, smelled or tasted a thing, we haven't experienced it. If we haven't experienced it, we can't move forward from it. Narratives aren't simply about moving forward; they are about moving forward from one sensual experience to the next.

When we sensualize our stories, we aren't adding spice to them. Sensation isn't spice; it's the world. It doesn't sit on top of the world. It IS the world. In the world, nothing is general, abstract or featureless. There is no such thing as love. There is only the specific love that Charlie feels for Sarah. And Charlie is not a man. He's a specific man with flaking skin on his shoulders, from a day at the Coney Island beach. And we hear the clack, clack, clack of Sarah's heels on the bathroom floor, which is what makes her an actual woman.

We can infuse our stories with sensuality in many ways, the two main ones being specific details about what's actually happening and metaphor:

1. He rubbed his palm over the gnarled surface.

2. The ship was bigger than four ocean liners laid end to end.

It's not enough to say "It was a really big ship." That doesn't move the story forward, because "a really big ship" is a cheat. It's like claiming you've actually given your son a birthday present when you've given him socks. Any kid will tell you socks don't count as a present (nor does "money for college"). It's like skipping to sex by saying "foreplay" instead of actually kissing and caressing. We have to earn each forward step by sensualizing the moment we're currently in.

There are a couple of traps to look out for: the first is cliche. "Cold as winter" isn't sensual, because we've heard it too many times. We read it as coldaswinter, and it doesn't spark any sensation in our brains. "Colder than a witch's tit" is just as bad. Does anyone actually picture Margaret Hamilton's nipples when they read it? (And if they did, would they get a sensation of coldness?) No. It becomes colderthanawitchestit in their brains. This is why we need to continually search for new, surprising metaphors and details. We can't evoke the hero's strength by mentioning his rippling biceps, but we may be able to do it by mentioning that he lifted a hospital bed as if it were a child's cot.

The other trap is over-sensualizing. Imagine never being able to get to dessert because the chef, unsure that he's really earned the right to move on from the main course, keeps serving you more and more steak. Imagine having to stop and examine each pea in a side of peas and carrots. Enough already! And some aspects of the meal aren't really parts of the meal. They're just scaffolding to make the meal possible. The smudge on the wine glass might be memorable, but it's gratuitous if the point of the experience is the Pinot Noir.

So how do we know which details to sensualize and which to just report in the abstract? Is it okay to say "He pulled the letter out of the mailbox," or do we need to describe the mailbox's rusty hinges? That's where a lot of the artistry comes in. It's an aesthetic judgement writers have to learn to make, and most improve at making it over time.

One key is to think about which elements are the actual plot points and which are the glue that holds those points together. Say the key points are that the hero hears the mailman drop letters into the box, reads one of the letters and learns his brother has died. The fact that he had to extract the letters from the mailbox isn't a plot point. It's glue. It may be necessary to include it, so that the reader knows that the fictional world follows the same rules as the real world (in which mailmen stuff letters into mailboxes). Another clue is that the character doesn't need to remember it. When looking back on the moment, years later, he'd probably talk about the letter that changed his life. He wouldn't necessarily recall pulling it from the mailbox.

With these glue-like elements, the first decision is whether we need to include them at all. Can the reader infer the mailbox from the items on either side of it, the mailman and the reading-of-the-letter? If it must be included (for the story to make sense or for rhythmic reasons -- maybe we want to build up suspense by pausing before the hero reads the letter...), can we add a sensual detail without slowing down the narrative? Glue doesn't necessarily have to be stripped of sensual details. We just want to make sure that such details don't slow the momentum or make the glue more important and memorable than we want it to be. If we answer all these questions in the negative, then we're free to relate the glue -- the mailbox -- as a naked fact, as just a mailbox.

If it's unimportant but necessary scaffolding, sometimes even the slightest detail is clutter. Is there really a point to "brass doorknob"? Maybe it should just be "doorknob." IF the doorknob needs to be sensualized, it probably needs a detail more stirring than "brass."

That which we sensualize will affect the reader -- it will titillate him, scratch him, stroke him or rub him the wrong way; that which we abstract will link two sensual details together. Link as quickly as possible. We need forks in order to eat, but the meal is about the linguini, not the cutlery.

Wednesday, May 11, 2011

why i'm against redundancy in stories

(I have some theories that elevate linear narratives above other narrative structures, by which I mean that the linear form will most please most readers, probably because readers experience life as "one damn thing after another," but I won't push those theories here. Here, let's just say that I happen to prefer linear narratives, so I'm going to discuss storytelling within the framework of that aesthetic.)

Because I hate the clunky phrase "linear narrative," from here on, I'm going to replace it with the word "story." Please indulge me! I know there are other kinds of stories. But for the duration of this essay, I am using "story" to label just one type of story.

In their purest form, stories move FORWARD CAUSALLY. Event one (at the earliest time in the story) causes event two (at a later time), which causes event three (at a still later time) and so on. Readers have this basic template in their heads. It's a pleasing template, and they notice when a story veers from it, which isn't to say that all veers are bad veers.

Readers don't mind veers -- in fact they sometimes enjoy them -- when they understand the purpose of the veering. For instance if, in a story, Fred goes to a cafe, meets Alice, and then leaves to go to work while Alice remains, the reader will understand (if it's clear Alice is an important character) why the story stays with her and then, later, travels back in time to catch up with what Fred saw on the way to his office. Such a story breaks from simple linearity, but for a clear reason: it can't follow Fred and Alice at the same time, because they are in different locations.

When a writer handles this badly -- say if he makes the Alice subplot boring -- most readers get irritated. They say, "What's the point of all that Alice stuff? Let's get back to Fred!"

Partly, the readers are upset because they feel the writer has broken linearity FOR NO GOOD REASON. (As I am only granted access to my own head, I can't prove this paragraph's assertion. It's a guess, based on some navel gazing and the assumption that many other readers are similar to me. My base assumption is that readers prefer linearity by default but are willing to accept breaks from the timeline if they understand the reasons for the breaks. Readers respond to fictional timelines as they often do to real-life ones: if your friend says, "Let's go to the park," but then insists you take a long, circuitous route there, you will likely be irked or confused if you don't know why your friend wants to walk that way. But if he says, "I know this is not the fastest way to the park, but if we go this way, we'll walk past this really cool building," you may be willing to take -- you may even enjoy -- the detour. We don't want our time wasted, in life or art.)

Readers also tend to respond well to cliffhangers, which are, by nature, breaks from linearity. Again, they are acceptable (and even fun, in an agonizing sort of way), because (a) we understand the reason for them, which is that the writer is teasing us, and (b) we enjoy that reason -- we like to be teased. (Also, (c) we know we'll get back to the timeline once the tease is over!)

Some readers hate cliffhangers. These readers, I think, have an extremely low tolerance for breaks in timelines: "Dammit! I want to know what's going to happen next!" I have a titillating love-hate relationship with cliffhangers. Since I so love the linear form, I REALLY want to know what's going to happen next. It's agonizing when the writer refuses to tell me. But it's a sweet agony, because I know he's going to tell me in the end. The writer is, in effect, a lover who is flirting with me by temporarily withholding sex.

Readers don't mind stepping off the relentless, forward-moving conveyer belt if there's something worth lingering over. In a strict causal sense, all we need to know is that Claire is crying. But it might be sweet to pause for a moment and watch a tear trickle down her cheek... and then to move on. Most of us like pausing to look at flowers on our way to the supermarket. But, eventually, we want to actually get to the supermarket. So, again, linearity can be stretched, paused, meandered from, etc., as long as we have some sense of the purpose (to watch the tear) and some reason to trust that the author will get us back on track soon.

My next assumption is that we can't attend to two thoughts at once. If Mike has two hobbies, stamp-collecting and baseball, he can talk about one and then the other. He can't talk about both of them at the same time. He can shuttle very quickly between them, but that's still one and then the other -- not both at once. Similarly, a story is either moving forward or it isn't. It can't both linger and move forward at the same time. If it's examining the tear, it's not moving forward; if it's moving forward, it's not examining the tear.

Consider this story:

"Once upon a time, Bill was in love with Mary. He asked her on a date, but she rejected him. So he tried to forget her by moving far away, to France. So he tried to forget her, by moving far away, to France. But, in the end, he was always haunted by his memories."

As you can see, I inserted a very clunky and obvious bit of redundancy. If that doubling is a problem, why is it a problem? It's a problem because it breaks linearity for no good reason. If you're reading the same sentence twice, then you're clearly not moving forward. And the stutter, in this case, isn't interesting. It gives you no new information. It's like a bore who insists on telling you a joke he's told you before. You have to endure it before you can move on.

So, again, in this obvious example, my point is that redundancy is unpleasant because it breaks linearity. We ask, "What's the point of repeating the same sentence twice?"

Actually, that question is incomplete. Things can only have "points" in some context. It's meaningless to ask "What's the point of a hammer?" A hammer has -- or doesn't have -- a point in contexts such as doing-carpentry or baking-a-cake. "What's the point of bringing a hammer to the cinema?" (The context is the cinema.) "What's the point of repeating information if the goal is to move forward -- unless there's some compelling reason to pause or sidetrack?"

Here's another example:

"Once upon a time, there was a huge, really big castle."

This irritates me for the same reason the last example irritated me. Even though "huge" and "really big" aren't literally the same words, they are close enough, and "really big" doesn't add any new information that "huge" didn't already add. Since you can't experience two things at once, when you're experiencing the repetitiveness of "really big," you're not moving forward. And so the pleasure of linearity is broken "for no good reason," which feels unpleasant.

Or does it? Some readers will complain that I'm nit-picking in this case. "Okay, maybe the writer could have omitted 'really big,' but Jesus Christ! It's just two words! Just skim past them!"

Other readers will (truthfully) say, "those extra two words didn't bother me." Which leads us to an interesting point about aesthetics: as soon as you posit an aesthetic rule, even if people agree with it in principle, they won't always care about it -- or even be affected by it -- in specific cases.

Let's say that we come up with an aesthetic rule that notes in songs should be sung "on key." This is a rule many people already agree with. Off-key notes sound bad. But if, while you're listening to a song, a dog barks right at the moment the singer goes off key, you won't hear her lapse, so you genuinely won't be bothered by her violation of the "don't sing off key" rule.

You also might not be bothered by it if you happen to not be paying close attention, and you also might not be bothered by it if the song is deeply meaningful to you (or the singer is beautiful). You don't have the mental bandwidth to perceive the bad note AND some competing pleasure. (Most of us have, at some point, been so dazzled by special effects -- or sexy actors -- in a movie that we haven't been bothered by its lackluster plot.) It's even possible that, for a moment, you WERE bothered by the off-key note, but the rest of the song was so good that you forgot that you ever were bothered.

(There also tends to be, for many of us, a social aspect of art -- a real or imagined relationship between us and the artist. People tend to "forgive" artists for lapses. "Well, she sang off key, but she has a cold, so it's understandable..." What is one actually feeling when one notices an artistic blunder but forgives the blunderer? One might be completely honest, in such a case, if one says, "The blunder didn't bother me." Being "bothered" is a negative emotion. If one doesn't feel anything negative -- because one is experiencing warm feelings of forgiveness -- then one likely isn't bothered.)

But rules are still useful. When we tell our children to look both ways before they cross the street, we don't mean, "Because if you don't, you will DEFINITELY be run over TODAY, when you cross this SPECIFIC STREET."

We mean looking-both-ways is a good rule of thumb to follow, in general, because if you don't follow it, at some point you may be hit by a car. We also realize that kids shouldn't try to apply the rule on a case-by-case basis, because they don't have all the information they need to know whether any specific case is one when it's worth applying the rule. For instance, a particular street may look deserted, but you never know when a car will suddenly zoom out of a driveway.

Aesthetic rules work this way, too. Yes, a reader might be able to ignore -- in fact, he might even not notice -- a tiny bit of redundancy. On the other hand, he might notice it and be bothered by it. In general, it's just good to avoid redundancy. That way, there's no chance a reader will be bothered by it.

(If you don't notice a small blemish in a work of art -- say you don't notice a little mustard stain on a dress (or you do notice it but aren't bothered by it) -- that doesn't mean you're inferior in any way to someone who does notice and is bothered by it. And if you do notice it, you're not inferior to people who don't. It's THERE! Anything perceivable may or may not be noticed by a given person.

It's reasonable to say that the dress would be better without the stain, even if the stain doesn't bother some people. The people who are bothered will stop being bothered if the stain is removed, and the people who were never bothered will remain unbothered, because it's not the case that they enjoyed the stain: they just weren't negatively affected by it. With the stain removed, the dress has a positive effect on more people than it had with the stain. And no one is bothered by the stain being removed.)

Is all redundancy bad? No. Like all breaks from linearity, redundancy can be pleasurable if the reader senses a purpose for it, and if the pleasure that purpose brings exceeds the pain of not-being-able-to-move-forward.

"Once upon a time, there was a giant. He was big, and I mean REALLY big. You may be thinking of him as big-as-a-house or something, but he was way bigger than that. His knees brushed against the clouds. He was one huge mother of a giant!"

Here, redundancy is obviously and purposefully slathered onto the prose in order to evoke a feeling of immensity.

What's important for artists to understand is the effect that redundancy always has: it breaks linearity. It's also important for artists to understand that audiences crave and expect linearity. There are often fun effects artists can create by thwarting and subverting expectations. They should just be aware of what they're doing. If redundancy is creating an interesting effect, great; if redundancy is just in the story because it hasn't been pruned out, it's a weed. It's a mustard stain on a dress.

The subtlest -- and therefore most treacherous, because it's hardest to weed out -- form of redundancy is when the repeated information is coming from two different sources. This tends to happen most often in "multi-media" productions, such as film and theatre (and comic books, etc.) If a story is being told through a combination of dialog, images, sounds, etc., then it's possible (even probable) that redundancy will creep in when two different aspects of a work meet.

For instance, in a play, a character might say, "That's the reddest car I've ever seen." If the set also contains a red car, we have redundancy -- and not interesting, meaningful redundancy. Remember, my base assumption (which you can disagree with) is that our brains can't attend to two things at once. So even if the line is spoken simultaneously with lights coming up on the car, the audience will still experience the line first and then the visual second (or the other way around). They will experience "red car ... red car," which breaks linearity.

Now, you may be thinking, "Well, what is the director supposed to do? The play calls for a red car and there's a line about the car being red." (One thing the director COULD do is cut the line, but let's assume that, maybe for legal reasons, he doesn't have that option.)

There may be nothing the director can do. One can't always solve all problems. And this might be one of those problems that the audience "forgives" or doesn't notice.

But it's still a problem. It's still a violation of my aesthetic rule. One's goal, when creating art, should be to do one's best. You don't go to hell if you allow redundancy into your art. You're not a "bad artist" if you don't solve lapses that are out of your control. That's not my point. I am not judging anyone. I am just trying to explain why I think redundancy is -- or can be -- a problem, and why, as an artist, you should try your best to root it out.

This multi-media quagmire becomes worse when, as is usually the case, multiple artists are collaborating. Both the costume designer and the playwright are trying to tell the same story. It is very likely that rather than dividing up aspects of the story between them, they will overlap and both give some of the same information. The natural person to watch for this is the director. He should say, "We don't have to tell that part of the story with costumes, because we're already telling it with words."

(Sometimes it's fun to experience the same information in different ways. So it may be that HEARING ABOUT a red car and SEEING a red car doesn't feel like redundancy. But be very careful -- if you share my aesthetic -- because this can quickly become an excuse. ANY time two different aspects of a multi-media piece are giving the same information, they are giving it in two different ways.

Imagine someone asked you to do the dishes and then held up a sign with "do the dishes" printed on it. That would just feel redundant -- and possibly insulting. My general rule of thumb is to not excuse redundancy just because the same information is being communicated in two different ways. That, itself, is not enough to excuse it.)

If you agree with me that redundancy is (or can be) a problem (or if you don't, but you enjoy trying on other people's aesthetic shoes, even if those shoes don't fit), you may enjoy grappling with what I call the "Macbeth" Problem: you're a director, staging "Macbeth," and -- like me -- you're against redundancy. Given that, how do you present the witches?

In the play, when Banquo sees the witches, he says...

What are these

So wither'd and so wild in their attire,

That look not like the inhabitants o' the earth,

And yet are on't? Live you? or are you aught

That man may question? You seem to understand me,

By each at once her chappy finger laying

Upon her skinny lips: you should be women,

And yet your beards forbid me to interpret

That you are so.

If, before or after he says this, the audience sees wildly dressed women with beards (and chapped lips, etc.), there's redundancy happening.

Maybe it wouldn't bother you, but if you were hired to craft a production that would please me, what would you do?

You could cut Banquo's speech, but it's a pretty good speech. Most Shakespeare lovers -- myself included -- love the plays at least partly for their poetry. Cutting this speech is a little like cutting "Send in the Clowns" from "A Little Night Music," but it is a possible solution.

But if you kept the speech, is there any way that you could present the witches that wouldn't be redundant? (You're not allowed to eliminate redundancy by injecting confusion. If the witches look like young, beautiful women, would that "work" or would it just be perplexing, given Banquo's description of them? As much as I hate redundancy, I prefer it to confusion.)

Remember: not all redundancy problems are solvable (which doesn't make the non-solvable ones "not problems.") Is this one solvable or not?

Since I haven't yet had the pleasure of directing "Macbeth," I haven't solved (or tried to solve) this problem, though I have some ideas about how I might solve it.

But if I was directing the play, the problem would pop into my head, because I consider part of my job, as a director, to be rooting out redundancy (and keeping the play chugging down its linear track, unless there's a compelling reason to veer away from the track).

As an editor mercilessly strikes out unnecessary adverbs (even if leaving them in wouldn't bother most readers), I mercilessly cut -- or try to cut -- all redundancy. I look at each moment in the play and ask myself "Is there any redundancy here?" and, if there is, I kill it if I can -- unless it's serving a clear purpose.

It's hard, because it's not always just a matter of "kill your own darlings." I am often forced to kill other people's darlings. That hat the costume designer worked on for a month... or that little thumbs-up gesture the actor loves so much (which isn't needed, because he also says, "Good idea!")... They are darling, darling darlings. But they're redundant darlings. So they have to go.